Translating "Under the Hood of TCP Socket Implementation on Golang" (深入理解Golang TCP Socket的实现)

Published @ 2023-06-18 11:03:54

Updated @ 2023-06-18 22:32:50

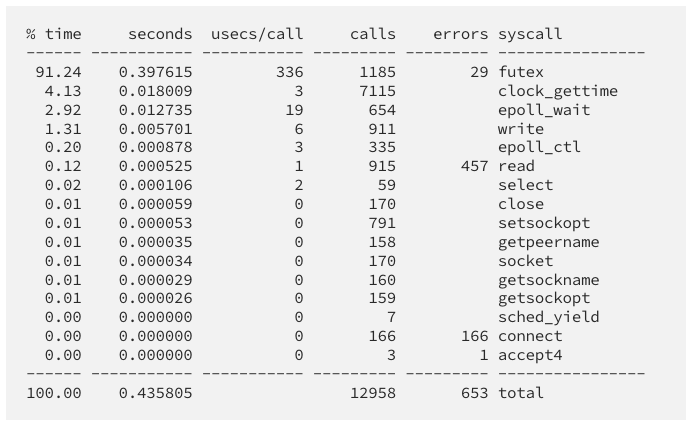

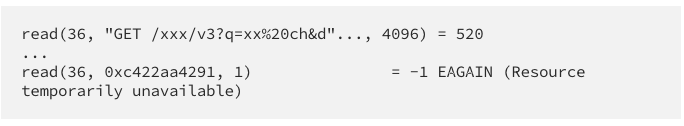

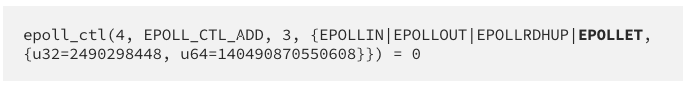

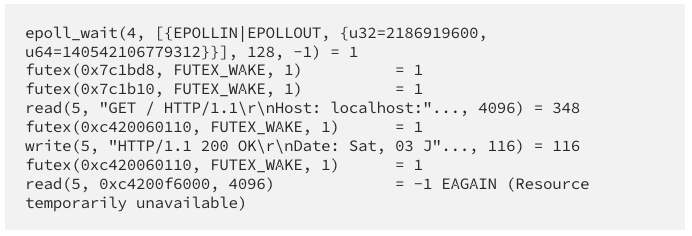

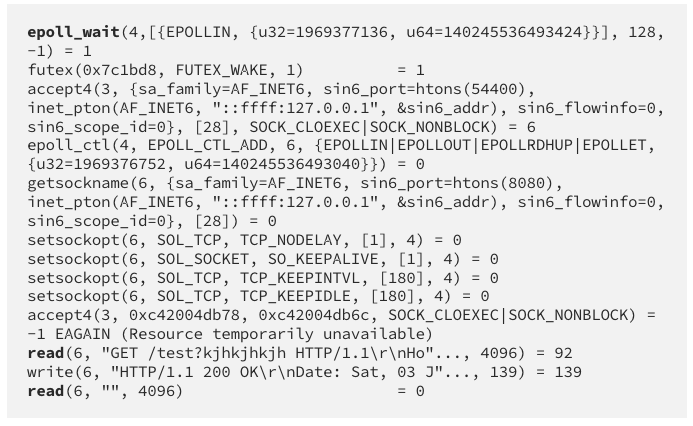

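Hi everyone, I'm 谢顶道人, also known as Lao Li. Today we translate our second article, and this one has very little filler. It is roughly about the so-called thundering herd effect in epoll's LT (level-triggered) mode. Frankly it should not be called a thundering herd; it is simply processes or threads being woken up for nothing. ET (edge-triggered) mode should have the same problem, plus an extra starvation problem, but fortunately neither is a big deal, and the flaws do not outweigh the merits.

Let's start with the title, which alone gives us plenty to chew on.

Original article: medium.com/@ggiovani/tcp-socket-implementation-on-golang-c38b67c5d8b

The title is "Under the hood of TCP Socket Implementation On Golang". "Implementation" is a noun and easy to understand. "Hood" is also a noun. I memorized it on Shanbay English (扇贝) as a hood, a face covering, or an academic hood (the thing you wore at your undergraduate graduation). Later I found in the sixth edition of the Oxford dictionary that it can also mean the folding top of a convertible, or the cover of a machine or device. Taken literally, then, "the hood of TCP" is "the hat, lid, or canopy of the Golang TCP socket implementation". If you are not too fussy, the intended meaning is already clear. If you hold the translation to a slightly higher standard, "under the hood of sth" can be rendered with the familiar Chinese title formula "深入理解xxx" ("understanding xxx in depth").

"Golang is surely my first to go language to write web application, it hides many details but still gives a lot of flexibility."

Golang is without question my first-choice language for writing a web application. It hides many implementation details while still leaving a lot of flexibility (I think "room to maneuver" is the better rendering).

"Recently I did strace to an http service app, actually did it without a reason but I found something interesting."

Recently I ran strace against an HTTP service. To be honest I had no particular reason for doing so, but this idle experiment turned up something interesting.

"Here’s what strace -c gave me :"

Here is what strace -c showed:

"There are a lot of interesting things in that profiling result, but the highlight of this article are the error number of read and the number of futex call."

Quite a lot in this profiling report is interesting, but this article focuses on two things: the number of failed read calls and the number of futex calls. ("Profile" is both a noun and a verb. The noun needs no explanation; as a verb it means to outline or summarize. "Profiling" is its present participle, and in our industry it means performance analysis.)

"At the first sight I didn’t think about futex call, which most of them are a wake call. I thought that this app serve hundreds req per second, it should has many go routine; At the other hand it also leverage channel, it should has many under the hood blocking mechanism."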
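At first I did not think the number of futex calls was a problem, since most of them are wake-up operations. I figured that the service handles a few hundred requests per second, so it must start many goroutines. The server code also uses quite a few channels, which must rely on blocking mechanisms under the hood. (futex is a Linux-specific system call that lets user space implement locks quickly; stay tuned for the lock series on my WeChat official account 高性能API社区. The author's point here is that the request volume is high, so a large number of futex wake-ups seemed normal. "Leverage" is both a noun and a verb: as a noun it means a lever or influence, as a verb it means to use leverage, to exert influence, or simply to make use of, so "leverage channel" means making use of Golang channels. "Mechanism" is a noun meaning machinery or mechanism, so "blocking mechanism" is exactly that.)

"So a lot of futex call should be normal, later I found out that this number was also from other things -will back to it later-."

So that many futex calls should be normal. Later, however, I found that things were not that simple: other things also contribute to this number, and we will come back to that. ("will back to it later" probably drops an "I" at the front. The sentence is simple; it just means we will continue with this later.)

"Who’s like error? Worst when it happens hundreds time in less than a minute, that’s what I thought when looking to that profiling result. What the heck with that read call?"

Who likes errors? (I honestly do not understand the grammar here. Why is there an 's after "Who"?) It is worst when hundreds of them happen in under a minute, which is what I thought when I looked at that strace profile. What on earth is going on with that read call? ("Heck" is colloquial and equivalent to "hell" but milder, so this is the well-known "what the hell with sth".)

"After every read call there is (maybe) always one read to the same file descriptor with EAGAIN error. Hey I remember this error, it comes when the file descriptor is not ready to certain operation; in this case read . But Why golang do this way?"

After every read call there seems to always be one more read on the same file descriptor, and it fails with EAGAIN. I remember this error: it is returned when a non-blocking file descriptor is not ready for the requested operation, in this case read. But why does Golang do it this way? (I will not say much about EAGAIN. On Linux it has the same value as EWOULDBLOCK, and many of the network programming articles on 高性能API社区 mention it.)

"I thought there was bug from the epoll_wait which gave wrong ready event to every fd? every fd? It looks like double ready rather than wrong event, why double?"

I suspected a bug in epoll_wait that handed a wrong ready event to every fd. Every fd? It looks more like two ready events on the same fd than a wrong event, so why two?

"Honestly my experience with epoll is shallow, basic event loop socket handler [0] is the only one. No multi threading, no synchronization, it’s very plain. After googling I found Marek (clever guy) criticizing epoll [1], and it’s like boom!!!"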
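To be honest I do not know epoll well. The only thing I have written with it is a basic event-loop socket handler (frankly, I am not sure what the author's "handler [0]" refers to; the [0] is most likely a reference marker): no multithreading, no synchronization, as plain as it gets. After some googling I found Marek (a clever guy) criticizing epoll, and it was like a bomb going off!

"Important TL;DR from that post : unnecessary wake up is ‘generally’ unavoidable when using epoll with multi threaded worker, because we need to notify each worker about waiting ready events."

(TL;DR is short for "Too long; didn't read".) The key point of that post: when epoll is used with multi-threaded workers, unnecessary wake-ups are generally unavoidable, because every worker has to be notified about pending ready events. (I looked up Marek's post as well. After reading it, what it says is roughly this: when several threads wait on the same epoll fd in level-triggered mode, epoll inherits the thundering herd problem of the select system call. If you are interested, 高性能API社区 has an article that explains the thundering herd in detail.)

"Hey that also explain about our futex wake numbers. But lets take a look at simplified version on how to use epoll in event loop socket implementation to further understand it :"

That also explains why our futex wake count is so high. To understand the problem better, let's look at a simplified version of an epoll-based socket event loop:

1. Bind socket listener to file descriptor, lets call it s_fd

   Bind the listening socket to a file descriptor, called s_fd. (Tip: in plain C you first create the fd with the socket system call, then use the bind system call to bind a sock addr to that fd.)

2. Create epoll file descriptor using epoll_create, lets call it e_fd

   Create an epoll fd with epoll_create, called e_fd. (Linux has only a handful of functions for working with epoll: epoll_create, epoll_ctl, and epoll_wait.)

3. Bind s_fd to e_fd using epoll_ctl for particular events (usually both EPOLLIN|EPOLLOUT )

   Use epoll_ctl to register s_fd with e_fd for particular events (usually EPOLLIN and EPOLLOUT).

4. Create forever loop (event loop) which call epoll_wait in every iteration to get incoming ready connections fd from binded events

   Create an endless event loop (nothing fancy, just a bare for). Every iteration calls epoll_wait to fetch the fds of incoming connections that are ready, based on the events registered in step 3.

5. Handle the ready connection, in multi worker implementation means notifying each worker

   Handle the ready connections. In a multi-process or multi-threaded implementation, this means notifying every worker process or thread.

"Using strace I found that golang using edge triggered epoll:"

Using strace I found that Golang uses epoll in edge-triggered mode:

"Which means this is also valid on golang socket implementation :"

This means the following sequence also applies to Golang's socket implementation:

1. Kernel: Receives a new connection.

   Kernel: receives a new connection.

2. Kernel: Notifies two waiting threads A and B.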
Due to “thundering herd” behavior with level-triggered notifications kernel must wake up both.

   Kernel: notifies the two waiting threads A and B. Because of the thundering herd behavior of level-triggered notifications, the kernel must wake both of them.

3. Thread A: Finishes epoll_wait().

   Thread A: returns from epoll_wait().

4. Thread B: Finishes epoll_wait().

   Thread B: returns from epoll_wait().

5. Thread A: Performs accept(), this succeeds.

   Thread A: calls accept, which succeeds. ("Perform" is a verb meaning to carry out, to run, to act, or to behave.)

6. Thread B: Performs accept(), this fails with EAGAIN.

   Thread B: calls accept, which fails with EAGAIN.

"At this point I am 80% confident this was the case, but let’s do some analysis using this simple web app [2]. Here is what it does on single request."

At this point I am 80% sure this is the cause, but let's analyze a bit more with this simple web app. Here is the strace record for a single request:

"Look how after epoll_wait there are two futex wake call which I think is the worker and then there are error read."

Notice that after epoll_wait returns there are two futex wake calls, which I believe are the workers being woken, and then the failed read follows.

"How if I set GOMAXPROCS to 1, enforcing single worker behavior."

What happens if I set GOMAXPROCS to 1 and force single-worker behavior? (With GOMAXPROCS set to 1, only one OS thread executes Go code at any given time.)

"When using 1 worker, there is only once futex wake call after epoll_wait and no read error. However I found this behavior is not consistent, in some trial I still got read error and 2 futex wake call."

With one worker there is only one futex wake call after epoll_wait, and no read error. However, this behavior is not consistent: in some runs I still got the read error and two futex wake calls.

"In the same post [1] Marek talk about EPOLLEXCLUSIVE which available starting from linux version 4.5. I’m using version 4.8 why it’s still happening on my machine? Turns out that golang is not using that flag yet, I hope at the next next version it will support that flag when available."

In the same post Marek mentions EPOLLEXCLUSIVE, which is available starting with Linux kernel 4.5. I am on 4.8, so why does this still happen on my machine? It turns out that Golang does not use that flag yet. I hope the version after next will use it where it is available.

"I learned a lot from this journey, hope you too."

I learned a lot on this journey, and I hope you did too.